Goodreads → SQLite

Books! Disappearing into books is great, and I have well-founded suspicions that it's occasionally the only thing that keeps me fit for human company. I like to share my opinions of the books I read – mostly so that I can look it up in a couple of months or years, but also for the small-but-steady troupe of interested people. This usually takes the form of my monthly book blog post – but now, you can see the raw data, too!

It used to be that I dreaded this monthly blog post, because … well, I read a lot. Sitting down and writing this blog post would usually take at least two hours, often up to twice as long. Not terribly enjoyable. So I decided to automate this tediousness, and since then the blog post is generated from reviews that I write on Goodreads once I am done with a book. Of course, I still take some time to edit and arrange the generated document, but that's more in the ballpark of thirty minutes than four hours.

So when Simon Willison, author of datasette and a bunch (what's the collective noun for open source software?) of other cool things, wrote about his Dogsheep project (a bundle of tools for extracting data from various platforms into SQLite databases), I naturally had to write something like that for Goodreads data.

Je vous présente: goodreads-to-sqlite. You can install and use it like this:

pip install --user goodreads-to-sqlite goodreads-to-sqlite auth goodreads-to-sqlite books goodreads.db

If you care about the “read at” date, append the --scrape option to the last command (and if you're interested in

the reasons, read on). You'll find a complete SQLite database of your public Goodreads shelves in the goodreads.db

file. you can also add the user name (or user ID or just the profile URL) or another person to the command, to download

their public reviews.

Using the data

What can you do with this database? What can't you do with this database! First off, you can look at my reviews and only look at books I gave a five-star rating. But more interestingly, you can find out more about your reading behaviour (or, well, mine at least):

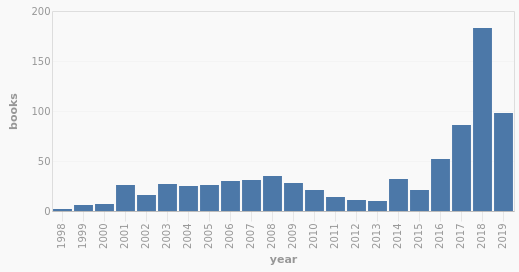

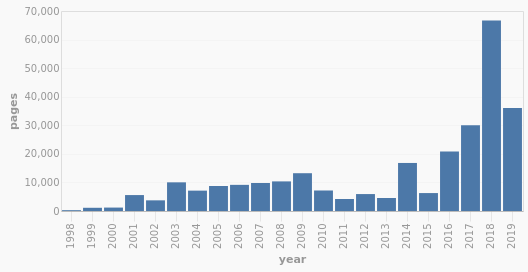

This query shows how many books I read per year, and this one, how many pages per year. Because datasette is cool like this, it also gives me charts for free (fun fact, apparently I read 66666 pages last year):

Of course, I can do way more than just looking up whose books I like to read, and correllate page numbers with months and similar backward-looking shenanigans. I can also import the reviews of those of my friends who have their reviews public, and figure out which books they liked, so that I can look into them. (Yes, you'd expect that a website like Goodreads is good at something like this. It isn't.)

I'm not particularly good with SQL, so here is the somewhat brute-force solution that takes quite a bit to run:

select books.title, count(reviews.id) as review_count, avg(reviews.rating) as average_rating, sum(reviews.rating) as total_rating from reviews join books on books.id = reviews.book_id join reviews_shelves on reviews.id = reviews_shelves.reviews_id join shelves on reviews_shelves.shelves_id = shelves.id join users on reviews.user_id = users.id where reviews.rating > 0 and shelves.name = "read" and users.name != "rixx" and not exists ( select 1 from reviews as inner_reviews join reviews_shelves on inner_reviews.id = reviews_shelves.reviews_id where inner_reviews.book_id = reviews.book_id and shelves_id = 136674318 ) group by reviews.book_id order by total_rating desc limit 50;

There's no online demo of this, because I'm not comfortable uploading the reviews of other people, even if they are public on Goodreads. For one thing, they didn't sign up for that, and they could also just set them to be private or change them and the updates would not reach me. Here are some of the top results of the query above for me, though:

| Title | Reviews | Average score | Total score |

|---|---|---|---|

| The Stand | 6 | 4.0 | 24 |

| Pride and Prejudice | 5 | 4.2 | 21 |

| What If? | 4 | 4.75 | 19 |

| The Once and Future King | 5 | 3.8 | 19 |

| Watchmen | 4 | 4.5 | 18 |

| The Picture of Dorian Gray | 4 | 4.5 | 18 |

| The Curious Incident of the Dog in the Night-Time | 4 | 4.25 | 17 |

| To Kill a Mockingbird | 4 | 4.25 | 17 |

| Of Mice and Men | 4 | 4.25 | 17 |

| One Hundred Years of Solitude | 4 | 4.0 | 16 |

| The Joy Luck Club | 4 | 4.0 | 16 |

| To Say Nothing of the Dog | 4 | 4.0 | 16 |

| CryoBurn | 4 | 4.0 | 16 |

| Nine Princes in Amber | 4 | 4.0 | 16 |

Implementation

Goodreads is a social media platform targeted towards readers and authors. Since it was acquired by Amazon (of course.) five years ago, it has lived a popular and assured, yet sad life – the platform has seen few changes since the acquisition, and the API is nothing to write home about. And yet: despite its aging and slow interface, it is the most used place to gather and share what books you are reading, and what you think of them.

The implementation is for the most part straightforward: Goodreads does have an API, and I use only the parts that don't require OAuth, which only requires an authentication token with every request. The annoying database interaction parts are run via the excellent sqlite-utils which make the whole thing as easy as can be.

The only real hitch was that the Goodreads API is missing some data some of the time: Depending on how you set a book as "read", the "read_at" date will not be shown in the API, even when it is visible in the web interface. Since this issue has been around for over a year, and API development of Goodreads has been dead for much longer than that, hoping for an upstream fix was not a good solution. Luckily, though, there is something I could do:

Usually, you retrieve reviews from a URL like "/review/list/{user_id}.xml" (with a couple of additional parameters to

sort, filter, and paginate the resulting list). But there is another API! If you drop the ".xml" suffix in the URL,

you'll get basically the same data, just in an XML format that is not quite standard and contains a bit more noise.

Other than that pretty much the same as in the official API. If you add the --scrape flag to the script, it will

fill in the missing data from these pages. Of course, this will take a bit longer, but it has proven to be very

reliable.

If this sounds interesting, try the script yourself, or play with my datasette a bit. Since datasette is very useful for that, I may post a short tutorial on how to set up your own datasette instance in the next days, if there's some interest.